Hi! I’m Baoxiong, a research scientist at BIGAI. I received my Ph.D. from the Department of Computer Science, University of California, Los Angeles. My research interests lie at the intersection of computer vision, artificial intelligence, robotics, and cognitive science, with a special focus on spatial/temporal reasoning and its application to acting and planning in the real world (scene/activity understanding, future prediction, grounded manipulation, etc.). My recent work focuses on integrating all of my previous research into humanoid robots and making them helpful when I’m old :-)

Previously, I obtained my M.S. from UCLA in 2019 and B.S. from Peking University in 2018.

Info: Email / Google Scholar / CV /

News

New: Our paper COLA on Human-Humanoid Collaboration is accepted by ICRA 2026, check it out!

New: Our paper SceneCOT on CoT-Reasoning in 3D scenes is accepted by ICLR 2026, check it out!

New: Invited tutorial at EIS 2025 hosted by ACM SIGEMBED China, check out the slides!

New: Invited talk at EAIRCon 2025 on 3D Gaussian World Models, check out the slides!

2025/10: SceneWeaver receives the Best Paper Award at RoboGen@IROS25, check out the slides and talk (EN)!

2025/10: We won first place at the IROS 25 UniTree Dancing Challenge!

2025/10: RoboVerse receives the Best Open-source Award at RoboGen@IROS25!

2025/10: Invited talk at HKU and 3DCVer on UniFP and COLA, check out the slides and talk (CN)!

2025/09: UniFP receives the Best Paper Award at CoRL 2025! Oral talk available here!

2025/09: One paper on Agentic 3D Scene Generation is accepted by NeurIPS 2025.

2025/08: We won the humanoid dancing championship at the World Humanoid Robot Games (WHRG)!

2025/06: One paper on Unified Force and Position Control is accepted by CoRL 2025 as an Oral!

2025/06: Two papers on 4D World Model and Embodied Vision Language are accepted by ICCV 2025!

2025/06: I’m co-organizing the 5th 3D Scene Understanding workshop at CVPR 2025. See you in Nashville!

2025/04: RoboVerse is accepted by RSS 2025! Go check it out here!

2025/03: I recently gave a summary of our work at Boston Dynamics. Check out the slides!

2025/02: Four papers on 3D Scene Understanding and Reconstruction are accepted by CVPR 2025!

2025/01: Two papers on Mobile Manipulation and Articulated Part Generation are accepted by ICRA 2025!

2025/01: One paper on Articulated Object Reconstruction is accepted by ICLR 2025!

Learning Human-Humanoid Coordination for Collaborative Object Carrying International Conference on Robotics and Automation (ICRA) 2026 (* indicates equal contribution. ✉ indicates corresponding author.)

SceneCOT: Eliciting Grounded Chain-of-Thought Reasoning in 3D Scenes International Conference on Learning Representations (ICLR) 2026 (✉ indicates corresponding author.)

SceneWeaver: All-in-One 3D Scene Synthesis with an Extensible and Self-Reflective Agent Advances in Neural Information Processing Systems (NeurIPS) 2025 ( RoboGen@IROS 2025 Best Paper Award ) (* indicates equal contribution. ✉ indicates corresponding author.)

Learning Unified Force and Position Control for Legged Loco-Manipulation Conference on Robot Learning (CoRL) 2025 ( Best Paper Award ) (* indicates equal contribution. ✉ indicates corresponding author.)

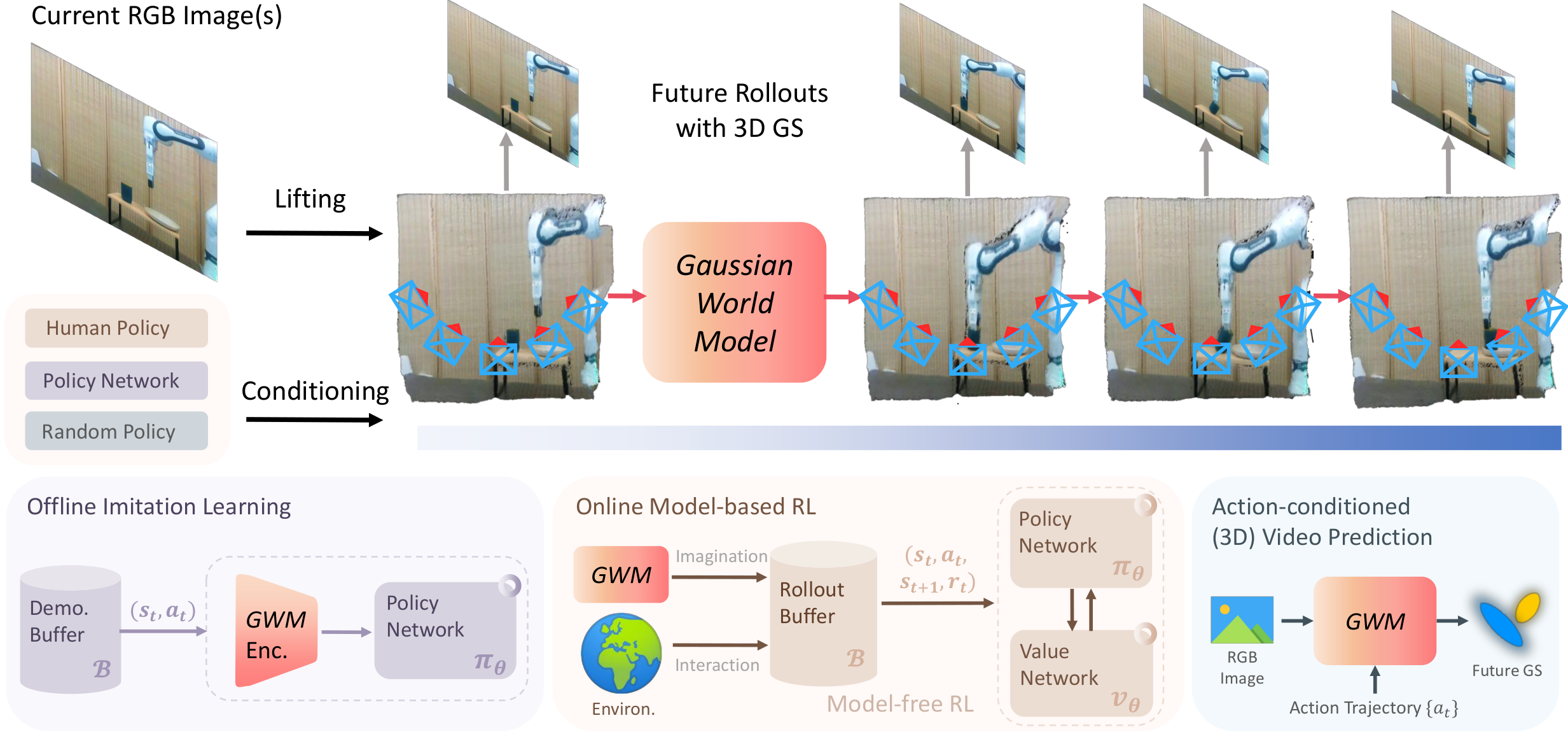

GWM: Toward Scalable Gaussian World Models for Robotic Manipulation International Conference on Computer Vision (ICCV) 2025 (* indicates equal contribution. ✉ indicates corresponding author.)

MetaScenes: Towards Automated Replica Creation for Real-world 3D Scans

Huangyue Yu*, Baoxiong Jia*, Yixin Chen*, Yandan Yang, Puhao Li, Rongpeng Su, Jiaxin Li, Qing Li, Song-Chun Zhu, Tengyu Liu, Siyuan Huang.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2025 (* indicates equal contribution.)

Unveiling the Mist over 3D Vision-Language Understanding: Object-centric Evaluation with Chain-of-Analysis IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2025 (* indicates equal contribution.)

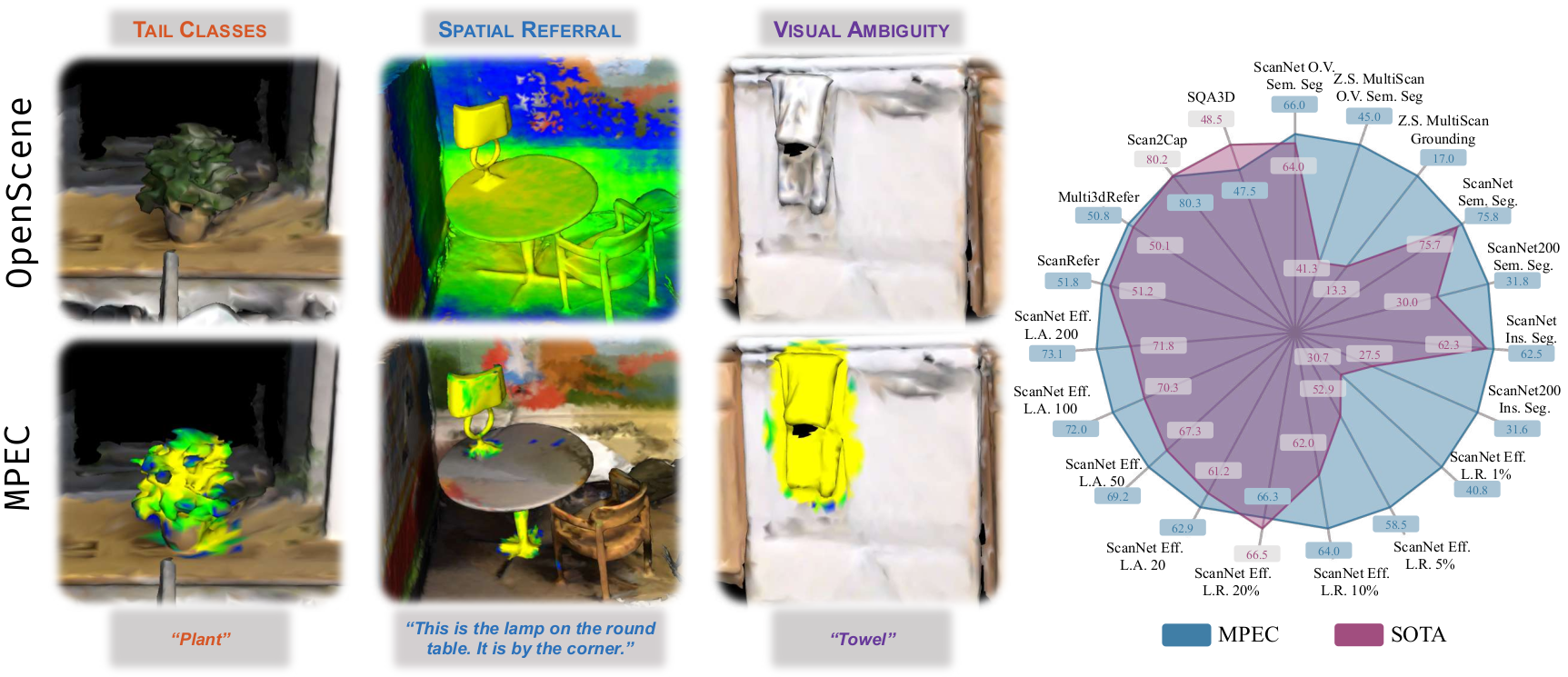

Masked Point-Entity Contrast for Open-Vocabulary 3D Scene Understanding IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2025 (* indicates equal contribution.)

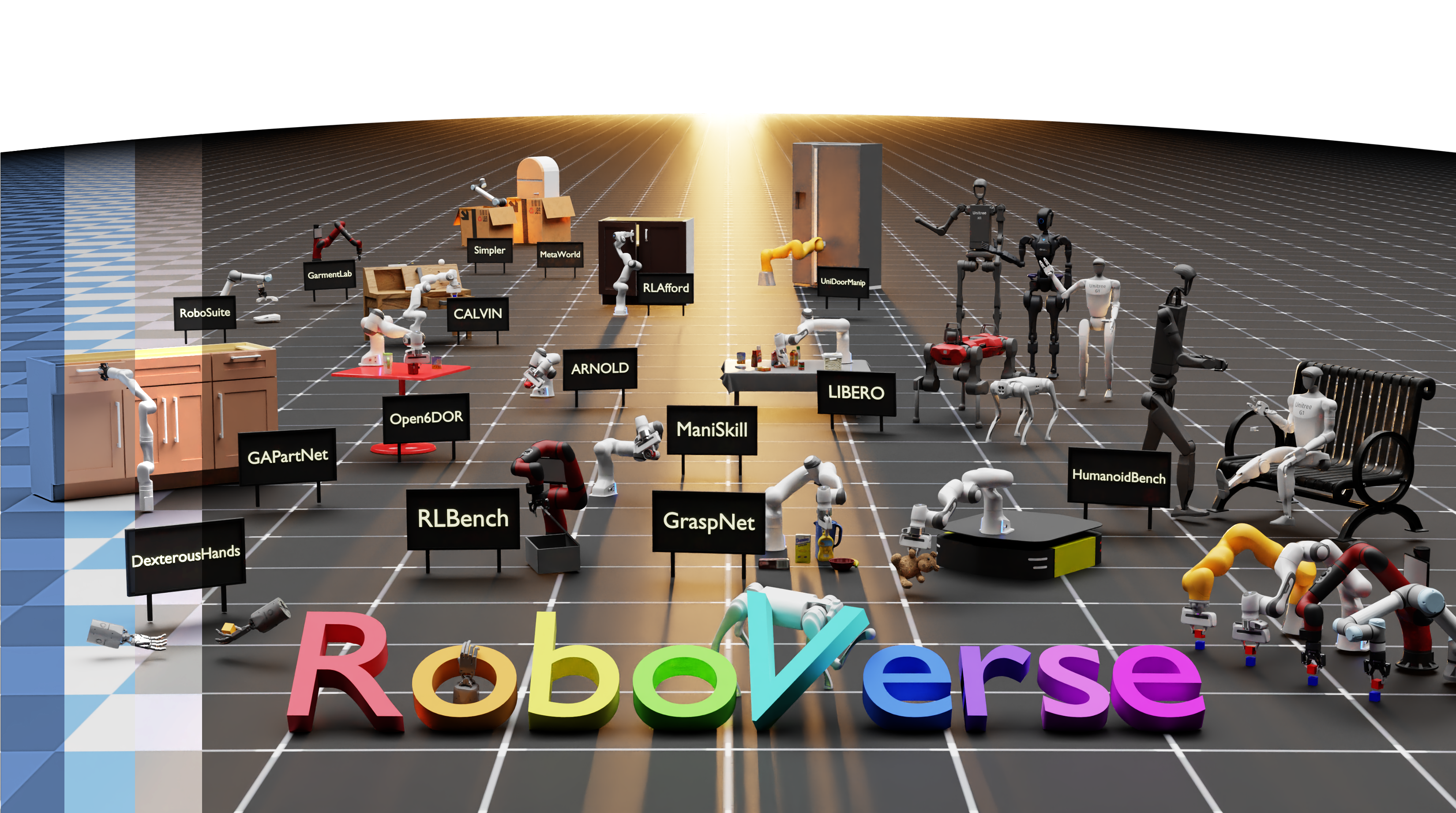

RoboVerse: Towards a Unified Platform, Dataset and Benchmark for Scalable and Generalizable Robot Learning

Haoran Geng*, Feishi Wang*, Songlin Wei*, Yuyang Li*, Bangjun Wang*, Boshi An*, Charlie Tianyue Cheng*, Haozhe Lou, Peihao Li, Yen-Jen Wang, Yutong Liang, Dylan Goetting, Chaoyi Xu, Haozhe Chen, Yuxi Qian, Yiran Geng, Jiageng Mao, Weikang Wan, Mingtong Zhang, Jiangran Lyu, Siheng Zhao, Jiazhao Zhang, Jialiang Zhang, Chengyang Zhao, Haoran Lu, Yufei Ding, Ran Gong, Yuran Wang, Yuxuan Kuang, Ruihai Wu, Baoxiong Jia, Carlo Sferrazza, Hao Dong, Siyuan Huang✉, Yue Wang✉, Jitendra Malik✉, Pieter Abbeel✉.

Robotics: Science and Systems (RSS) 2025 ( RoboGen@IROS 2025 Best Open-source Award ) (* indicates equal contribution. ✉ indicates corresponding author.)

Building Interactable Replicas of Complex Articulated Objects via Gaussian Splatting International Conference on Learning Representations (ICLR) 2025 (* indicates equal contribution.)

Closed-Loop Open-Vocabulary Mobile Manipulation with GPT-4V International Conference on Robotics and Automation (ICRA) 2025 (* indicates equal contribution. ✉ indicates corresponding author.)

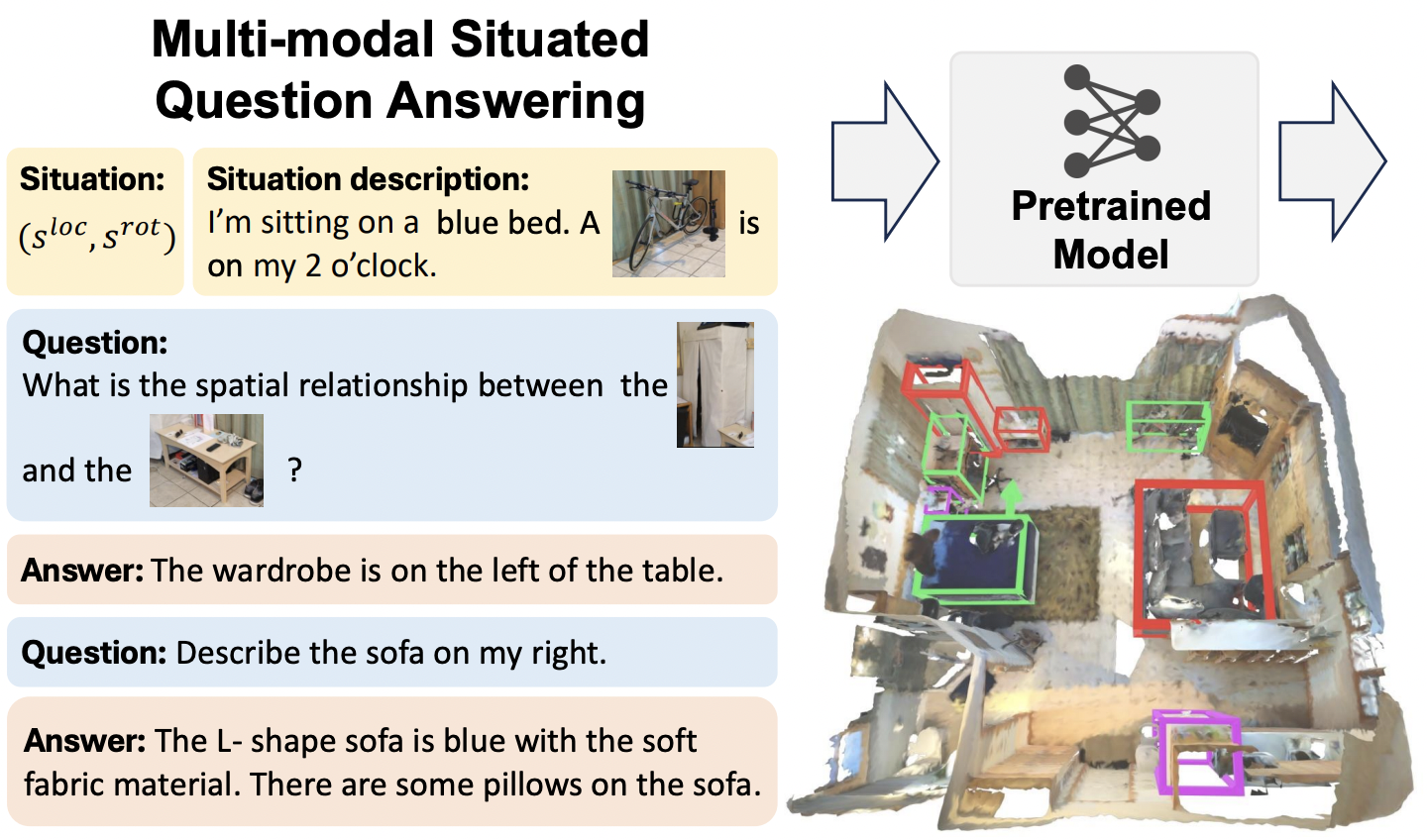

MSR3D: Multi-modal Situated Reasoning in 3D Scenes Advances in Neural Information Processing Systems (NeurIPS) 2024 (* indicates equal contribution. ✉ indicates corresponding author.)

SceneVerse: Scaling 3D Vision-Language Learning for Grounded Scene Understanding European Conference on Computer Vision (ECCV) 2024 OpenSUN3D @ ECCV 2024 (* indicates equal contribution.)

SlotLifter: Slot-guided Feature Lifting for Learning Object-centric Radiance Fields European Conference on Computer Vision (ECCV) 2024 Wild3D @ ECCV 2024 (* indicates equal contribution.)

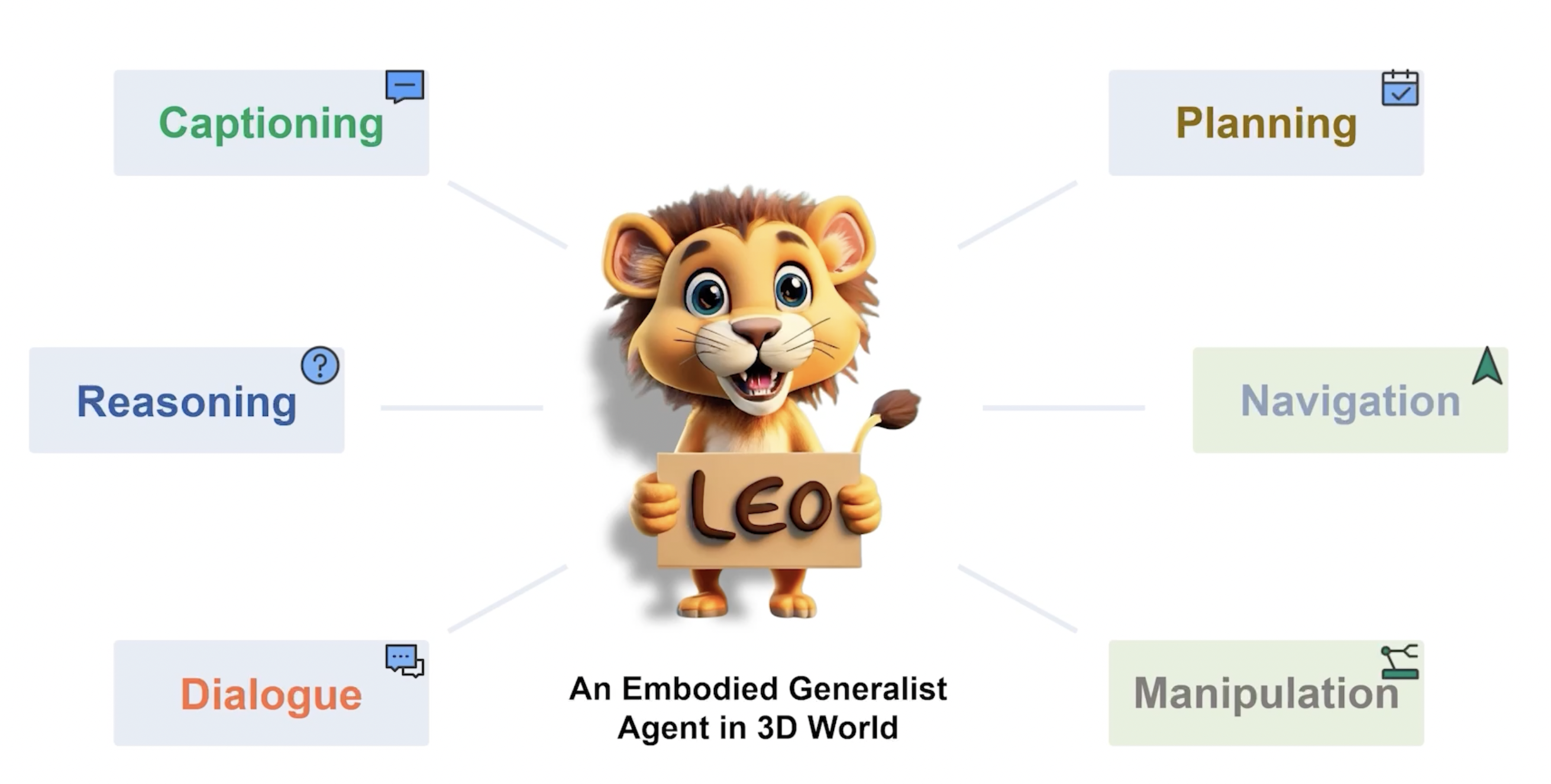

An Embodied Generalist Agent in 3D World International Conference on Machine Learning (ICML) 2024 GenAI4DM & AGI @ ICLR 2024 (* indicates equal contribution.)

PhyScene: Physically Interactable 3D Scene Synthesis for Embodied AI IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2024 ( Highlight ) AI3DG @ CVPR 2024 (* indicates equal contribution.)

Move as You Say, Interact as You Can: Language-guided Human Motion Generation with Scene Affordance IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2024 ( Highlight ) HuMoGen @ CVPR 2024